Debugging Errors in SSIS Data Quality Services Cleansing Component

As part of Microsoft’s push to include business users in the Business Intelligence space, the addition of Data Quality Services to SQL Server’s feature set opened up the ETL process to the people who, arguably, know the data best. Integration with SSIS was a great move, meaning that this user control extends to automated processes, further closing the gap between data and the business.

However, like most new components, Data Quality Services has some teething problems, and these can be quite hard to find when debugging your SSIS packages. Here’s a quick tip that should help solve some of those tricky to find DQS issues.

What is Data Quality Services?

Data Quality Services (DQS) was added in SQL Server 2012 as a feature to allow knowledge base curation for data cleansing and matching rules. Available in the Business Intelligence and Enterprise SKUs, Data Quality Services works (optionally) in tandem with Master Data Services to allow business users to control and shape how data should be handled and validated for business use.

As well as a standalone Data Quality Client application, Data Quality Services also integrates with SQL Server Integration Services (SSIS) to enable the use of your user-curated cleansing and matching rules during your automated Extract, Transform, Load (ETL) processes. In effect, this give the very people who understand the data the ability to shape the Transform stage of ETL, something that would traditionally rely on developers and architects to find out this information from users and implement it during build.

Data Quality Services’ Standard Error

Running DQS cleansing and/or matching as part of an SSIS package is extremely handy, but can be difficult to debug, even with the improved logging and management offered by the SSIS Catalogue.

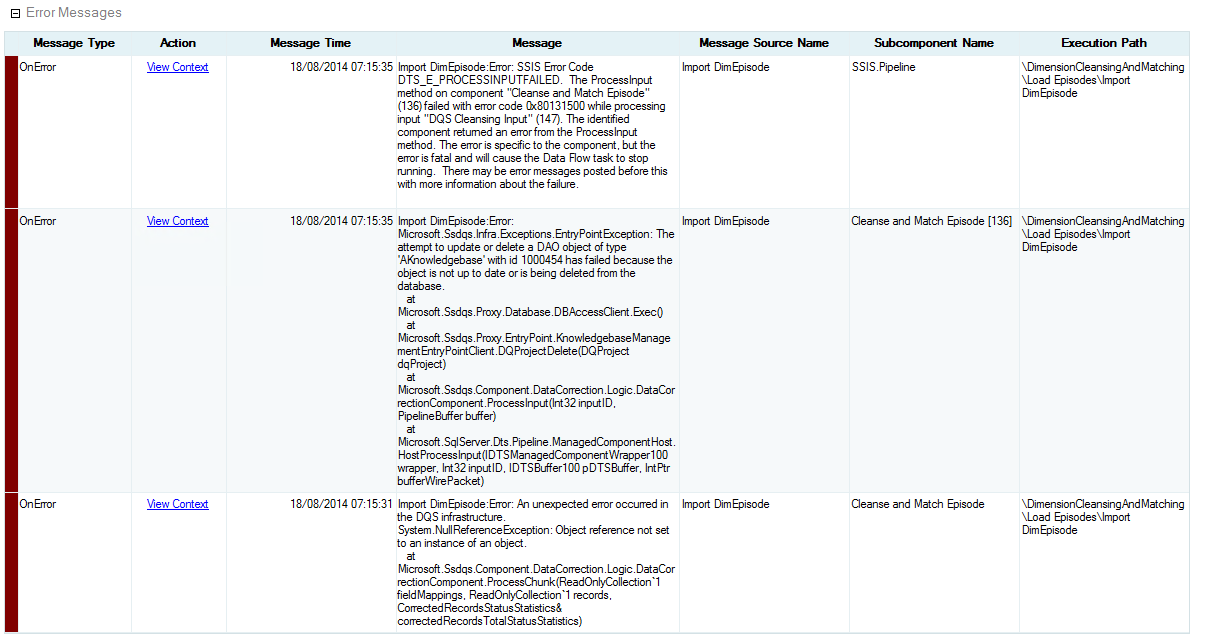

In my packages, whenever there was an issue I found that my SSIS logs always showed an error similar to this one:

Microsoft.Ssdqs.Infra.Exceptions.EntryPointException: The attempt to update or delete a DAO object of type 'AKnowledgebase' with id 1000454 has failed because the object is not up to date or is being deleted from the database.

This generally proves to be somewhat of a red herring, and actually seems to be a separate issue with the way that DQS handles an unexpected error. Rather than falling over gracefully, it seems to cause this additional exception. More often than not, this would be preceded by a NullReferenceException, which will be very familiar to the .NET developers out there.

Data Quality Services Log Files

To get more detail on the actual root cause of the error, you can use Data Quality Services’ built-in log files. These can provide a more accurate error message and stack trace, allowing you to properly deal with the issue.

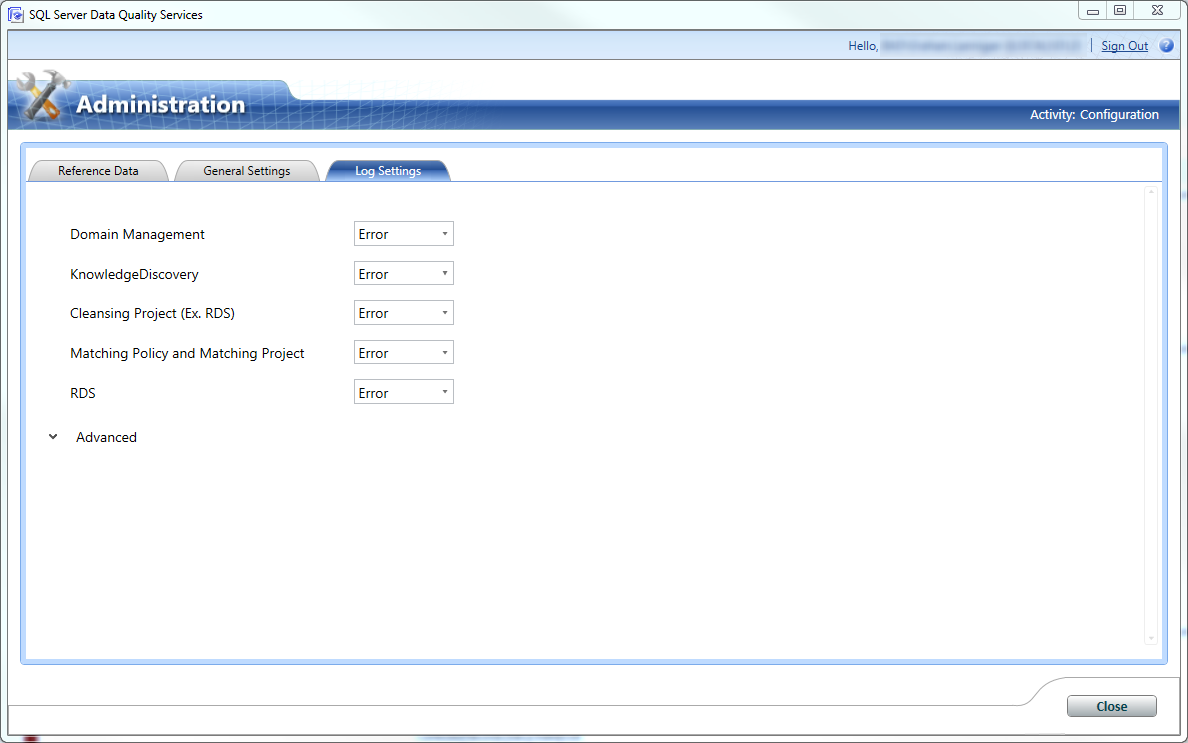

Browse to the Log Settings tab in Configuration to change the logging level.

To enable detailed logging, open your Data Quality Client, and go to the Configuration page. From there, click the Log Settings tab, and change the Cleansing Project drop-down to Debug.

This will enable a more detailed logging level which should capture the full context of any exception. Then just run your SSIS package, and following failure, browse to your Data Quality Services log folder and check out the DQServerLog.DQS_MAIN file for details.

The default location is:

C:\Program Files\Microsoft SQL Server\MSSQL11.INSTANCENAME\MSSQL\Log\DQServerLog.DQS_MAIN

If you’ve run into trouble with Data Quality Services components in SSIS, or have some additional tips for stability or debugging, please leave a comment below.