6 Tips for developing with AWS Lambda Functions

AWS Lambda functions are getting a lot of press right now, with “serverless” being the topic du jour. We’ve been using Lambda extensively and it’s incredibly flexible and useful, although it isn’t suitable for every situation. It really does remove a lot of obstacles for the average developer. That said, there are a few tips I’d like to share based on our experience with Lambda. Many of these may be no-brainers, but they’re always worth sharing, as you never know when they’ll come in handy.

1 - Don’t implement your own logging

We always build logging into our applications (who doesn’t?), so when we started with Lambda we built in some logging libraries that called CloudWatch, the monitoring and logging service offered on AWS, at various points during execution. We very quickly started getting “rate limit exceeded” errors and noticed that our Lambdas were running really slowly. A little digging around in Amazon’s documentation turned up this sentence:

Lambda automatically integrates with Amazon CloudWatch Logs and pushes all logs from your code to a CloudWatch Logs group associated with a Lambda function, e.g. /aws/lambda/{function name}.

That’s right. You don’t need to call the CloudWatch API explicitly to publish your log messages. Doing so only slows everything down and eats into your account’s CloudWatch Logs API request quotas.

Simply write to standard output (or standard error). In C#, for example:

Console.WriteLine("Order received");

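Lambda handles the rest, writing your logs to a CloudWatch Logs log group that it creates for you, provided the function’s execution role has permission to write logs (the AWS managed AWSLambdaBasicExecutionRole policy covers this). Handy.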
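The same idea in a Python handler might look like the minimal sketch below. It assumes the Python runtime, where anything printed to stdout also ends up in the function’s log group. The `format_log_line` and `log` helpers and the `order_id` field are hypothetical. Emitting one JSON object per line is just a convention we like because it makes the logs easier to query later.

```python
import json
import sys
import time


def format_log_line(level, message, **fields):
    """Build one JSON log record (hypothetical helper, not a Lambda API)."""
    record = {"level": level, "message": message, "timestamp": time.time()}
    record.update(fields)
    return json.dumps(record, sort_keys=True)


def log(level, message, **fields):
    # No CloudWatch API calls here: Lambda captures stdout and forwards it
    # to the function's log group on our behalf.
    print(format_log_line(level, message, **fields), file=sys.stdout, flush=True)


def handler(event, context):
    # "order_id" is an illustrative event field, not part of any AWS schema.
    log("INFO", "order received", order_id=event.get("order_id"))
    return {"status": "ok"}
```

The standard `logging` module works too. The Python runtime installs its own handler on the root logger, so `logging.getLogger().setLevel(logging.INFO)` followed by `logging.info(...)` lands in the same log group.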

2 - Learn how to interpret CloudWatch logs and metrics

Those CloudWatch logs I just mentioned? Yep, get familiar with them. Also explore the Lambda metrics available in CloudWatch (invocations, errors, duration, throttles and so on). These will be crucial for debugging your functions and keeping an eye out for any issues. You can (and should) also define alarms on the Lambda metrics so that you’re alerted when something goes wrong. An alarm can even launch another set of functions to take corrective action.
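Here’s a sketch of such an alarm. It builds the arguments for CloudWatch’s `PutMetricAlarm` call (boto3’s `put_metric_alarm`) on the built-in `Errors` metric in the `AWS/Lambda` namespace. The function name, SNS topic ARN, threshold and period are assumptions to adjust for your own setup. Keeping the parameter-building separate from the AWS call means you can check it without credentials.

```python
def errors_alarm_params(function_name, topic_arn, threshold=1, period_seconds=60):
    """Arguments for CloudWatch PutMetricAlarm on one function's Errors metric."""
    return {
        "AlarmName": f"{function_name}-errors",
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": period_seconds,
        "EvaluationPeriods": 1,
        "Threshold": float(threshold),
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # No invocations means no Errors datapoints; don't treat that as failure.
        "TreatMissingData": "notBreaching",
        # Notify an SNS topic, which can email people or trigger another function.
        "AlarmActions": [topic_arn],
    }


def create_errors_alarm(cloudwatch_client, function_name, topic_arn):
    # cloudwatch_client is expected to be boto3.client("cloudwatch").
    cloudwatch_client.put_metric_alarm(**errors_alarm_params(function_name, topic_arn))
```

Usage would be along the lines of `create_errors_alarm(boto3.client("cloudwatch"), "orders-fn", topic_arn)`, where `orders-fn` and `topic_arn` are placeholders.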

AWS have since added several services to improve observability. X-Ray in particular is extremely valuable for tracing requests through serverless applications.

3 - Create Dead Letter Queues in SQS or SNS

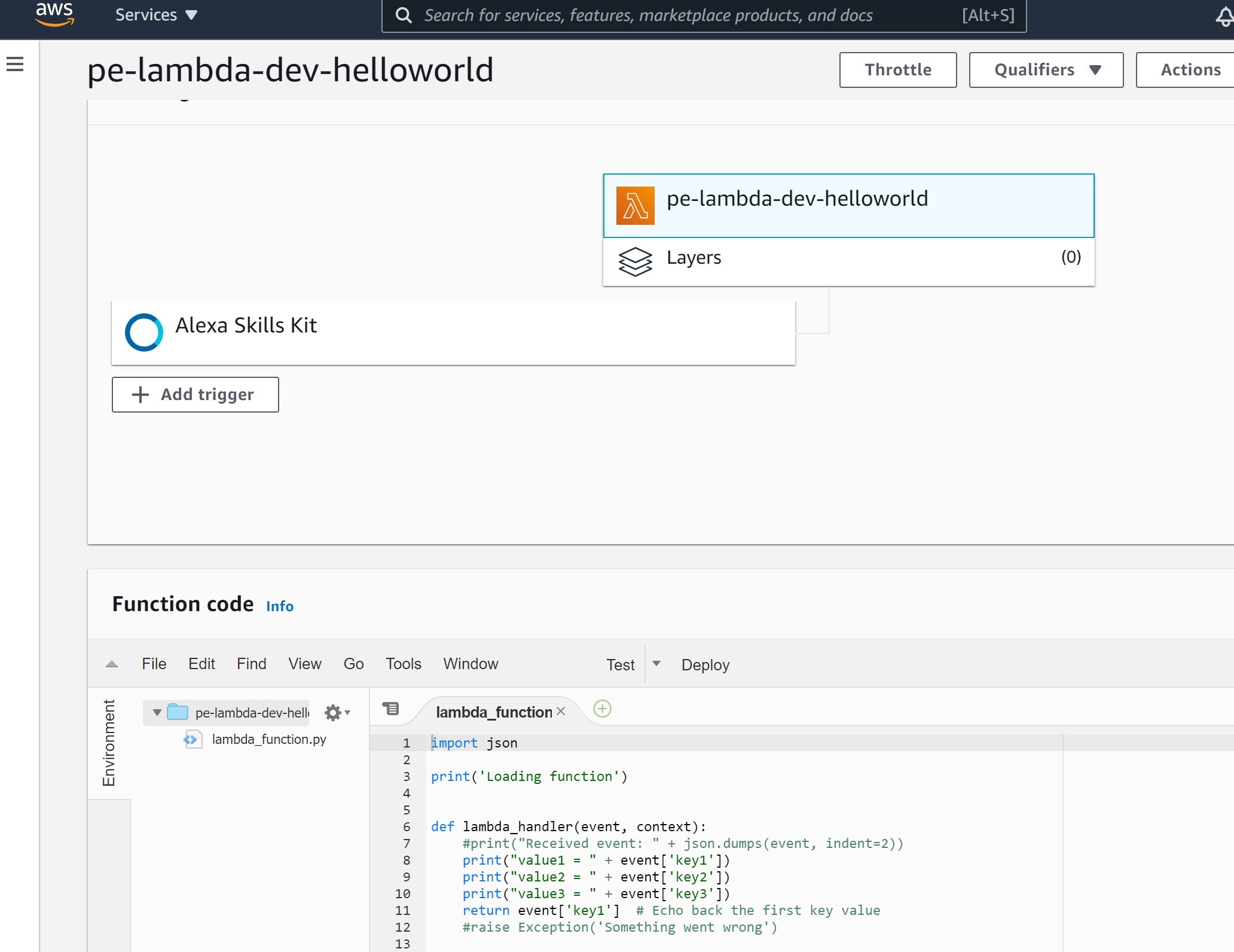

Because Lambda is serverless, there’s no box you can log into to run the application or poke about to figure out what went wrong. You’re completely reliant on your logging and CloudWatch metrics to debug your Lambda functions. You also have the very handy test events feature, which lets you save standard test payloads to use for integration and regression testing of your functions.

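But when things do go wrong, there’s no retry button you can press. Lambda is event-driven, so once an event has fired, it’s gone. For asynchronous invocations, Lambda retries a failed event twice by default. If those attempts also fail and you haven’t handled the failure, the event is discarded and you’ve lost it.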

Fortunately, AWS lets you define Dead Letter Queues for exactly this scenario. You can designate either an SQS queue or an SNS topic as a function’s DLQ. When an asynchronous invocation fails (after any retries), Lambda pushes the incoming event message, along with some additional context, onto that resource. With SNS, its fan-out nature means you can send out alerts, trigger other services, or both. You could even retry the same function, although watch out for infinite loops. With SQS, you can persist the message and process it later with another service.
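If you go the SQS route, the queue can itself trigger a function that records what failed. Below is a minimal sketch of such a consumer. It assumes the DLQ is an SQS queue wired up as the event source for a separate, hypothetical function, and that your original events are JSON. Lambda adds `RequestID`, `ErrorCode` and `ErrorMessage` message attributes to each DLQ message, and the message body is the original event. The helper names are our own.

```python
import json


def _attr(record, name):
    # SQS event records expose message attributes as {"stringValue": ..., "dataType": ...}.
    return record.get("messageAttributes", {}).get(name, {}).get("stringValue")


def summarise_dlq_record(record):
    """Pull the original event and Lambda's failure context out of one DLQ message."""
    return {
        "request_id": _attr(record, "RequestID"),
        "error_code": _attr(record, "ErrorCode"),
        "error_message": _attr(record, "ErrorMessage"),
        # The body is the event the failed invocation received (assumed to be JSON).
        "original_event": json.loads(record["body"]),
    }


def handler(event, context):
    # Hypothetical consumer function subscribed to the DLQ queue.
    failures = [summarise_dlq_record(r) for r in event.get("Records", [])]
    for failure in failures:
        print(json.dumps(failure))
    return {"processed": len(failures)}
```

The DLQ itself is set on the failing function, for example with boto3’s `update_function_configuration(FunctionName=..., DeadLetterConfig={"TargetArn": queue_arn})`. That function’s execution role also needs permission to send messages to the queue.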

There are a lot of options with this, but please check out this post comparing SQS vs SNS Dead Letter Queues for further information.

In addition to Dead Letter Queues, there are also Lambda Destinations. These forward a record of the invocation, including the event and the function’s result, to a destination of your choosing (SQS, SNS, EventBridge, another Lambda function, etc.) on success, on failure, or both.
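Destinations and the retry behaviour for asynchronous invocations are configured per function. The minimal sketch below builds the arguments for boto3’s `put_function_event_invoke_config`. The ARNs are placeholders, and the one-hour maximum event age is our own assumption; Lambda’s default is six hours.

```python
def event_invoke_config(function_name, on_failure_arn, on_success_arn=None,
                        max_retries=2, max_event_age_seconds=3600):
    """Arguments for Lambda PutFunctionEventInvokeConfig (asynchronous invocations)."""
    # Lambda accepts 0-2 retries and a maximum event age of 60-21600 seconds.
    if not 0 <= max_retries <= 2:
        raise ValueError("max_retries must be between 0 and 2")
    if not 60 <= max_event_age_seconds <= 21600:
        raise ValueError("max_event_age_seconds must be between 60 and 21600")
    destinations = {"OnFailure": {"Destination": on_failure_arn}}
    if on_success_arn is not None:
        destinations["OnSuccess"] = {"Destination": on_success_arn}
    return {
        "FunctionName": function_name,
        "MaximumRetryAttempts": max_retries,
        "MaximumEventAgeInSeconds": max_event_age_seconds,
        "DestinationConfig": destinations,
    }


def apply_event_invoke_config(lambda_client, **kwargs):
    # lambda_client is expected to be boto3.client("lambda").
    lambda_client.put_function_event_invoke_config(**event_invoke_config(**kwargs))
```

Setting `max_retries=0` together with an `OnFailure` destination is one way to hand failures straight to your own retry logic instead of relying on Lambda’s built-in retries.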

4 - Estimate how many ENIs and IPs you need and request a limit increase if necessary

This one can be a big gotcha if you’re not careful. If your Lambda function is connected to a VPC so that it can reach resources inside it (and, via a NAT gateway, the Internet), Lambda needs elastic network interfaces (ENIs) and IP addresses from your subnets. How many IPs you have available will depend on your subnets and how you’ve divided up your CIDR ranges. ENIs are also limited to 5,000 per Region by default (it used to be 350, which we slammed right into on a couple of occasions). Fortunately, Amazon seems pretty open to raising this limit quite dramatically, but you need to put in a request. Since 2019, Lambda has shared ENIs across execution environments that use the same subnet and security-group combination. That makes the limit much harder to hit than it used to be, but it’s still worth planning for.

Plan your Lambdas and your VPC design in advance. Identify which of your Lambdas need to run in a VPC and, for those, work out what your concurrency will be and how many IP addresses and ENIs you’re going to need.
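To get a feel for the numbers under the shared-ENI model, here’s a rough sketch that counts the unique subnet and security-group combinations across your VPC-attached functions. It assumes one ENI per unique combination, with each ENI taking one IP address from its subnet. The input format is hypothetical, something you might assemble from your own infrastructure definitions. Treat the result as a lower bound, since Lambda may add ENIs under heavy load.

```python
def estimate_shared_enis(functions):
    """Rough lower bound on ENIs (and IPs per subnet) for VPC-attached functions.

    Assumes one shared ENI per unique (subnet, security-group set) combination.
    """
    combos = set()
    for fn in functions:
        # The order of security groups doesn't matter, so compare them as a set.
        groups = frozenset(fn["security_groups"])
        for subnet in fn["subnets"]:
            combos.add((subnet, groups))
    # Each ENI consumes one IP address from the subnet it lives in.
    ips_per_subnet = {}
    for subnet, _ in combos:
        ips_per_subnet[subnet] = ips_per_subnet.get(subnet, 0) + 1
    return len(combos), ips_per_subnet
```

Under the older model, the count grew with concurrency, which is the trap we fell into. With shared ENIs, it depends mostly on how many distinct network configurations you use, so reusing the same subnets and security groups across functions keeps it small.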

5 - Use environment variables and/or an additional data store (e.g. DynamoDB)

Everybody knows not to hard-code configuration settings. Fortunately, Lambda provides a built-in way to pass configuration data to your function: environment variables. These are simple key-value pairs that you define when you deploy and configure your function. Alternatively, if your config is a little more involved, or you need to maintain session information or other state, you can use a separate data store to hold this data. We’ve used DynamoDB, although you could just as easily use the Parameter Store in AWS Systems Manager, which Lambda can also access.
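A minimal sketch of reading environment-variable config in a Python function follows. The variable names `TABLE_NAME`, `LOG_LEVEL` and `MAX_BATCH_SIZE` and their defaults are hypothetical. The config is cached in a module-level variable so that warm invocations in the same execution environment don’t re-parse it.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    table_name: str
    log_level: str
    max_batch_size: int


def load_config(environ=os.environ):
    """Read settings from environment variables (names are hypothetical)."""
    if "TABLE_NAME" not in environ:
        # Required setting: fail fast rather than run with a bad config.
        raise RuntimeError("TABLE_NAME environment variable is not set")
    return Config(
        table_name=environ["TABLE_NAME"],
        log_level=environ.get("LOG_LEVEL", "INFO"),
        # Environment variables are always strings, so convert explicitly.
        max_batch_size=int(environ.get("MAX_BATCH_SIZE", "25")),
    )


_config = None


def handler(event, context):
    global _config
    # Load once per execution environment; warm invocations reuse it.
    if _config is None:
        _config = load_config()
    return {"table": _config.table_name}
```

For more involved settings, `load_config` could fall back to Parameter Store through boto3’s `ssm` client and its `get_parameter(Name=..., WithDecryption=True)` call.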

6 - Tweak your config

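By default, Lambda allocates 128 MB of memory to your function and allocates other resources (such as CPU) in proportion to it. After each invocation, Lambda writes a REPORT line to your CloudWatch logs showing how much memory your function actually used (Max Memory Used). So it makes sense to go back to the Lambda configuration and tune this setting to an appropriate level. Bear in mind that because CPU scales with memory, a larger setting can sometimes run fast enough to cost less overall, so check duration as well as memory. Getting this right can save you money in the long term.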
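Here’s a sketch of reading those numbers back out. Each `REPORT` line includes `Billed Duration`, `Memory Size` and `Max Memory Used`. The helpers below assume you’ve already pulled those lines out of CloudWatch. The 30% headroom is an arbitrary assumption on our part, not AWS guidance.

```python
import math
import re

MAX_MEMORY_RE = re.compile(r"Max Memory Used: (\d+) MB")
MEMORY_SIZE_RE = re.compile(r"Memory Size: (\d+) MB")
BILLED_RE = re.compile(r"Billed Duration: (\d+) ms")


def peak_memory_mb(report_lines):
    """Highest 'Max Memory Used' value across Lambda REPORT log lines."""
    peaks = []
    for line in report_lines:
        match = MAX_MEMORY_RE.search(line)
        if match:  # skip START/END and application log lines
            peaks.append(int(match.group(1)))
    if not peaks:
        raise ValueError("no REPORT lines with 'Max Memory Used' found")
    return max(peaks)


def suggest_memory_mb(report_lines, headroom=1.3):
    wanted = math.ceil(peak_memory_mb(report_lines) * headroom)
    # Lambda accepts memory settings from 128 MB to 10,240 MB.
    return min(max(wanted, 128), 10240)


def gb_seconds(report_line):
    """GB-seconds billed for one invocation: memory (GB) x billed duration (s)."""
    billed = BILLED_RE.search(report_line)
    size = MEMORY_SIZE_RE.search(report_line)
    if not (billed and size):
        raise ValueError("not a REPORT line")
    return (int(size.group(1)) / 1024) * (int(billed.group(1)) / 1000)
```

Because compute is billed in GB-seconds, doubling memory costs about the same if it halves the billed duration. Comparing `gb_seconds` before and after a change makes that trade-off visible.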

Likewise, set the timeout to suit your function. The default is 3 seconds and the maximum is 15 minutes. If your function never needs more than, say, 20 seconds, set the timeout a little above that rather than selecting the maximum.
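Picking a value can be as simple as taking the slowest duration you’ve seen in your REPORT lines and adding a margin. Below is a minimal sketch; the 2x headroom is our assumption, so tune it for your workload.

```python
import math

LAMBDA_MAX_TIMEOUT_S = 900  # 15 minutes


def suggest_timeout_seconds(durations_ms, headroom=2.0):
    """Whole-second timeout covering the slowest observed run plus a margin."""
    if not durations_ms:
        raise ValueError("need at least one observed duration")
    wanted = math.ceil(max(durations_ms) * headroom / 1000)
    # Timeouts are whole seconds between 1 and 900.
    return min(max(wanted, 1), LAMBDA_MAX_TIMEOUT_S)
```

Inside a Python function, `context.get_remaining_time_in_millis()` tells you how much of that budget is left, which is handy for stopping batch work cleanly before the timeout hits.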

Summary

So, nothing majorly groundbreaking in there, but these are a handful of tips gleaned from working with Lambda over the past couple of years. They might be helpful to anyone still finding their way in the relatively new world of serverless computing.

Are you using AWS Lambda or another serverless provider? Do you have any additional tips or comments? Please add them down below to keep the discussion going.